Creating a MetaHuman with an Intel RealSense depth camera

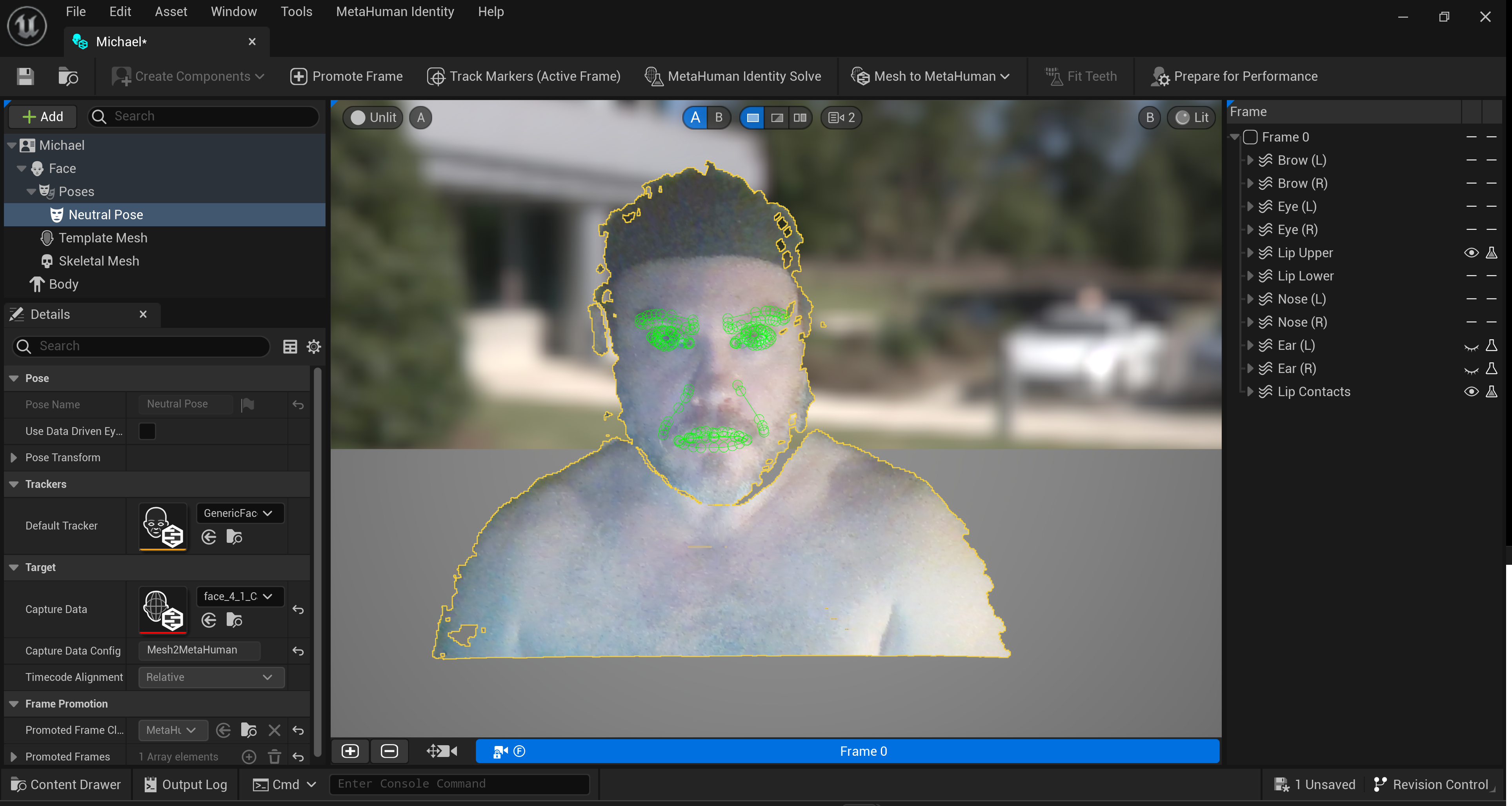

I used a depth camera to take a single image and turn it into a mask for an Unreal Engine MetaHuman.

For class, I wanted to make some characters that look like me for a game concept. There are two ways to make a MetaHuman for Unreal Engine: the first uses the web-based MetaHuman Creator to blend together pre-existing faces, and the second matches a mesh of a human face against the neutral pose.

I tried both methods to create something that resembled my own facial characteristics. The MetaHuman on the right in the image below was made with MetaHuman Creator, and the one on the left was made by applying a depth mask.

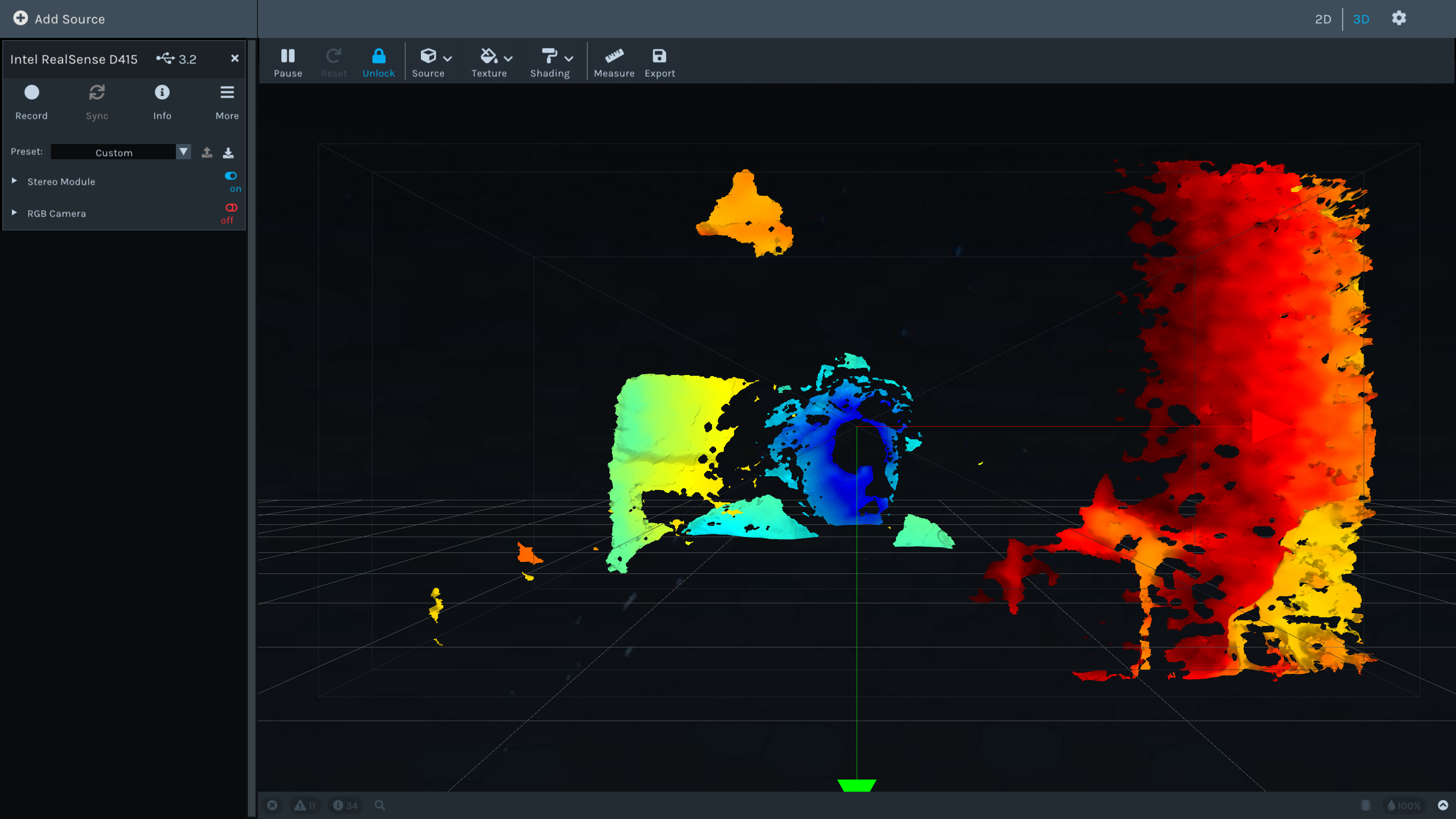

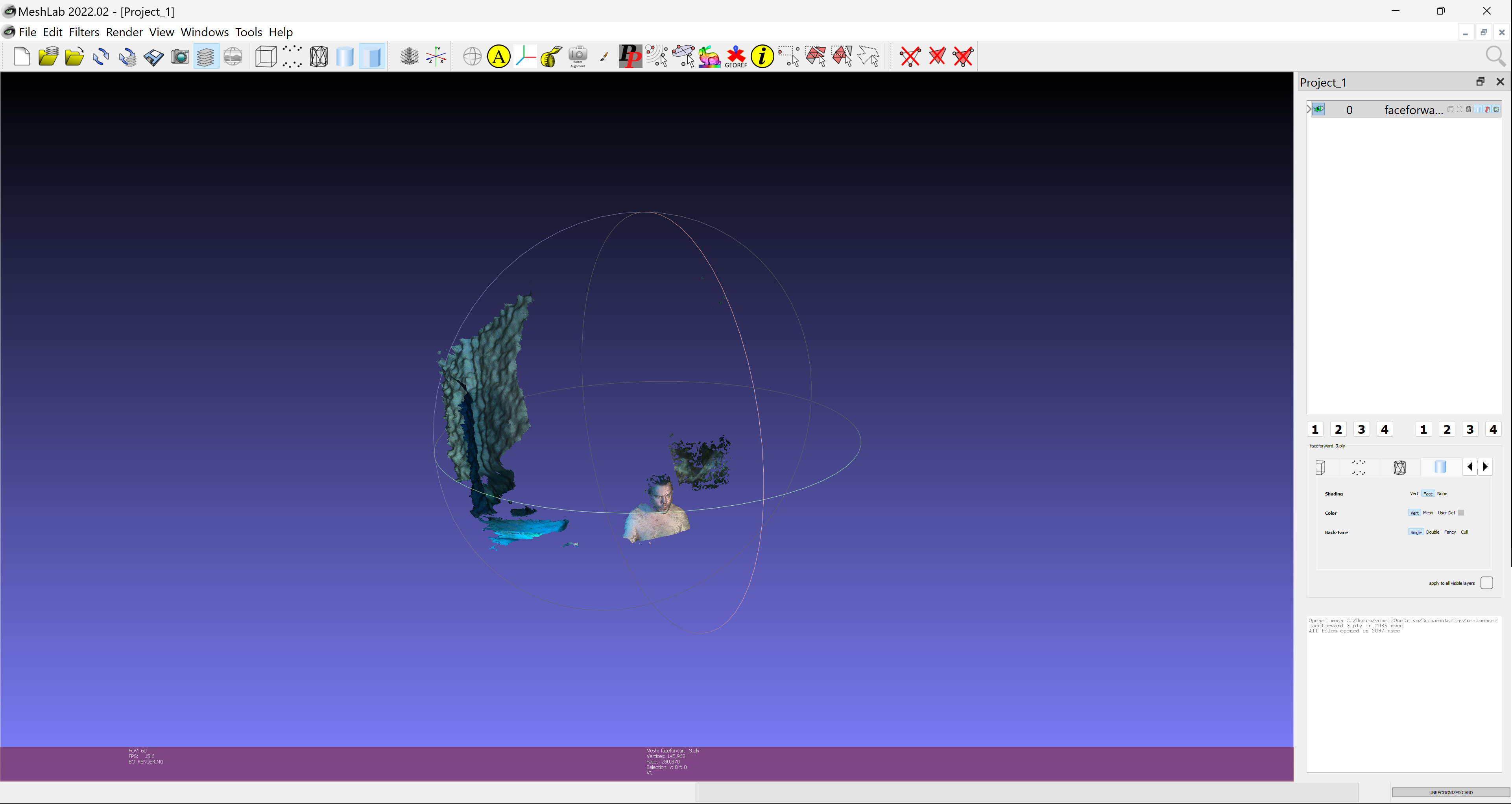

Along the way I tried to use RealityCapture, with MeshLab for conversion to a file format RealityCapture would accept, to apply more than one point cloud and video to the mesh. In the end it was only necessary to capture a single frame as a .ply point cloud file from Intel's RealSense SDK utility.

Using Blender, one can open this file and save it as FBX for import into Unreal. I needed both Blender and MeshLab to re-orient the mesh and remove the background material, leaving only the face in the foreground.

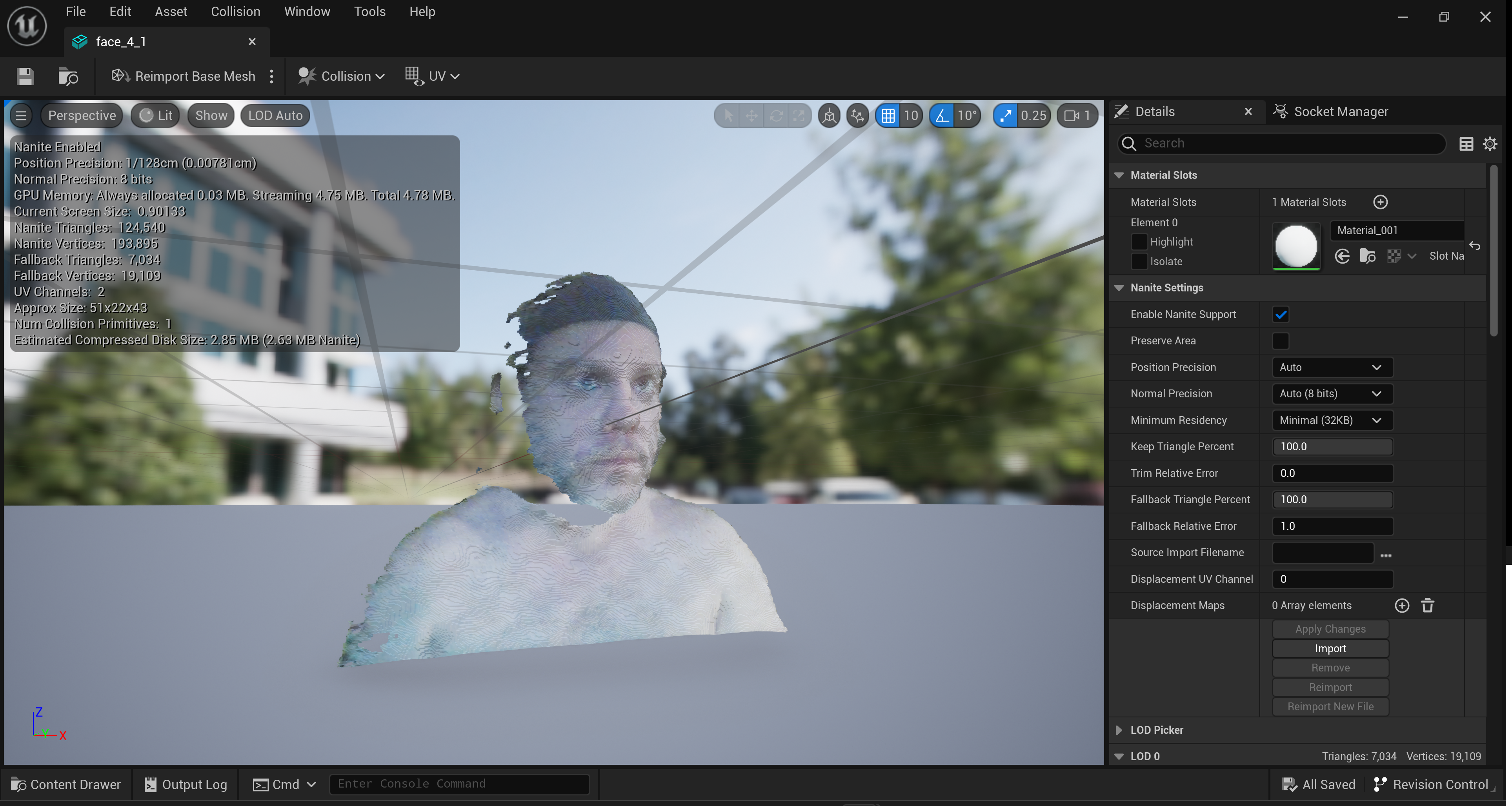

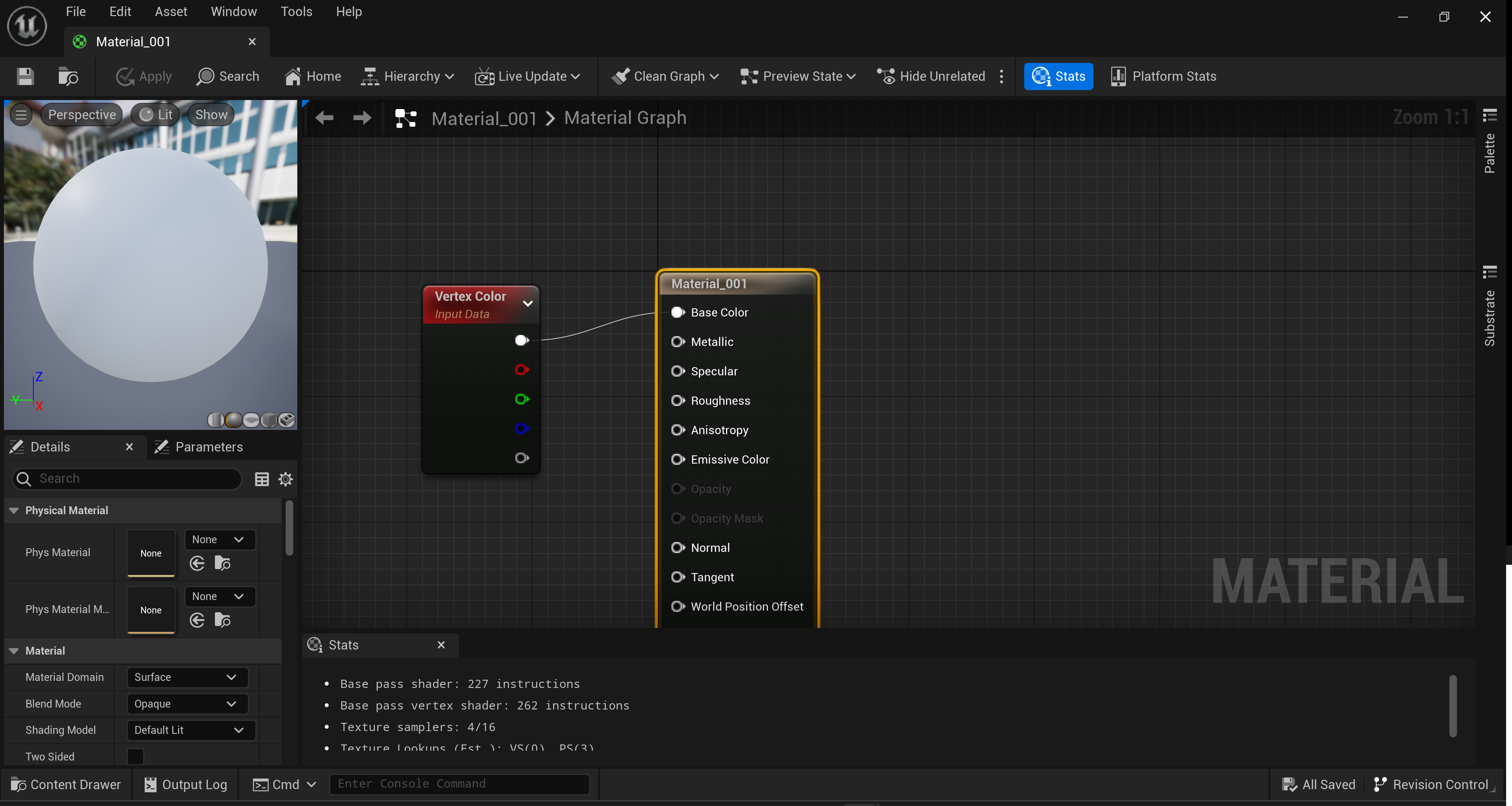
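I removed the background by hand in Blender and MeshLab, but the same idea can be sketched in code as a simple depth cutoff: keep only the points close to the camera. This is a minimal illustration and not what I actually ran. The `keep_foreground` function, the sample points, and the 1.0 m cutoff are all made up for the example, and it ignores mesh faces, which would need re-indexing after points are dropped.

```python
from typing import Iterable, List, Tuple

# (x, y, z, r, g, b): position in meters plus 8-bit vertex color.
Point = Tuple[float, float, float, int, int, int]


def keep_foreground(points: Iterable[Point], max_depth_m: float) -> List[Point]:
    """Keep only points whose distance along the camera axis is within max_depth_m.

    abs() is used because the sign convention for z can differ between exporters.
    """
    return [p for p in points if abs(p[2]) <= max_depth_m]


# Hypothetical sample: two face points about 0.5 m away and one wall point at 2.5 m.
cloud: List[Point] = [
    (0.00, 0.00, -0.50, 200, 170, 150),
    (0.10, 0.00, -0.52, 190, 160, 140),
    (0.30, 0.20, -2.50, 90, 90, 90),
]

face = keep_foreground(cloud, max_depth_m=1.0)
print(len(face), "of", len(cloud), "points kept")
```

A fixed cutoff only works when the face is clearly nearer to the camera than everything else in the frame, which is one reason doing it interactively in Blender or MeshLab was more practical for me.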

The color information from the point cloud is embedded in the vertices, so the Unreal Engine material for the mesh must have a VertexColor node connected to the base color. The MetaHuman plugin needs color information for the eyes in order to distinguish where things are. I tried and confirmed that it does not work on an untextured mesh.
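Since the color has to survive all the way into Unreal, it can help to check that the captured .ply actually declares per-vertex color before going further. The sketch below reads only the text header of a PLY file, so it should work for both ASCII and binary exports. It assumes the color channels use the conventional property names `red`, `green`, and `blue`; the function names and the sample header are hypothetical, not taken from my capture.

```python
from typing import List


def ply_vertex_properties(data: bytes) -> List[str]:
    """Return the property names declared for the `vertex` element in a PLY header."""
    header, sep, _ = data.partition(b"end_header")
    if not data.startswith(b"ply") or not sep:
        raise ValueError("not a PLY file, or the header is incomplete")

    names: List[str] = []
    in_vertex = False
    for line in header.decode("ascii", errors="replace").splitlines():
        tokens = line.split()
        if not tokens:
            continue
        if tokens[0] == "element":
            # Track whether the following property lines belong to the vertex element.
            in_vertex = len(tokens) > 1 and tokens[1] == "vertex"
        elif tokens[0] == "property" and in_vertex:
            names.append(tokens[-1])  # the property name is the last token
    return names


def has_vertex_color(data: bytes) -> bool:
    """True if the vertex element declares red, green, and blue (assumed names)."""
    return {"red", "green", "blue"} <= set(ply_vertex_properties(data))


# Hypothetical header for illustration only.
sample = b"""ply
format binary_little_endian 1.0
element vertex 3
property float x
property float y
property float z
property uchar red
property uchar green
property uchar blue
element face 1
property list uchar int vertex_indices
end_header
"""

print(has_vertex_color(sample))
```

To run it against a real capture, read the file in binary mode and pass the bytes in, for example `has_vertex_color(open("face.ply", "rb").read())`, where `face.ply` stands in for whatever the export was named.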

After importing the mesh into Unreal Engine from Blender, I followed these instructions to build the MetaHuman.

Copyright 2023 Secret Atomics